First, we need to load two libraries rgdal and raster, if you are using ess in emacs perhaps you must do some additional steps (you can email me). Now, we load our raster file, here, I'll use a Digital Elevation Model (DEM) of my country Peru (DEM_PERÚ.tif). You can download all the files in this link. However, this raster file doesn't match with the real form of my country, so we'll need to crop it, we'll do it later, but we are going to take advantage and load a vectorial shapefile which will be our mask (peru_disolved.shp). I recommend looking at the graphics to be sure that our files are loaded correctly.

# 1. Packages

library(rgdal) # Geospatial Data Abstraction Library

library(raster) # Tools for gridded spatial data

# 2. Read DEM and convert to raster layer object

dem <- raster("~/Downloads/DEM_PERÚ.tif") # raster

vperu <- readOGR("peru_disolved.shp", layer="peru_disolved") # vectorial

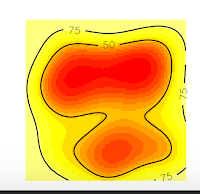

plot(dem) # preliminar plotting

# 3. Generate a reclass matrix

m <- c(-Inf, 250, 1, 250, 500, 2, 500, 1000, 3, 1000, 2000, 4,

2000, 4500, 5, 4500, Inf, 6) # specify dsired levels

rclmat <- matrix(m, ncol = 3, byrow = TRUE)

# 4. Reclassify the raster layer

reclassified_dem <- reclassify(dem, rclmat)

# 5. Plot the results

par(mfrow=c(1, 2))

plot(dem)

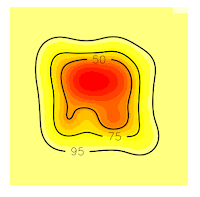

plot(reclassified_dem)

The graphic is weird because we still need to crop it as a sixth step. You can quickly get it using the following code.

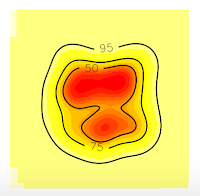

# 6. Cropping

r1 <- crop(reclassified_dem, extent(vperu))

r2 <- mask(r1, vperu)

plot(r2)

If you wish, you can save the files:

# 7. Save new raster

writeRaster(reclassified_dem, "~/Downloads/reclassified_dem.tif", drivername="GTiff",

type = "Float32")

writeRaster(dem_crop, "~/Downloads/dem_crop.tif", drivername="GTiff",

type = "Float32")

All the code is:

# -*- coding: utf-8 -*-

# Author: Irbin B. Llanqui

#"""

# Language: R script

# This is a temporary script file.

#"""

# 1. Packages

library(rgdal) # Geospatial Data Abstraction Library

library(raster) # Tools for gridded spatial data

# 2. Read DEM and convert to raster layer object

dem <- raster("~/Downloads/DEM_PERÚ.tif") # raster

vperu <- readOGR("peru_disolved.shp", layer="peru_disolved") # vectorial

plot(dem) # preliminar plotting

# 3. Generate a reclass matrix

m <- c(-Inf, 250, 1, 250, 500, 2, 500, 1000, 3, 1000, 2000, 4,

2000, 4500, 5, 4500, Inf, 6) # specify dsired levels

rclmat <- matrix(m, ncol = 3, byrow = TRUE)

# 4. Reclassify the raster layer

reclassified_dem <- reclassify(dem, rclmat)

# 5. Plot the results

par(mfrow=c(1, 2))

plot(dem)

plot(reclassified_dem)

# 6. Cropping

r1 <- crop(reclassified_dem, extent(vperu))

r2 <- mask(r1, vperu)

plot(r2)

# 7. Save new raster

writeRaster(reclassified_dem, "~/Downloads/reclassified_dem.tif", drivername="GTiff",

type = "Float32")

writeRaster(dem_crop, "~/Downloads/dem_crop.tif", drivername="GTiff",

type = "Float32")

That's all for today.